What Happens When Your School Thinks AI Helped You Cheat

The education system has an AI problem. As students have started using tools like ChatGPT to do their homework, educators have deployed their own AI tools to determine if students are using AI to cheat.

But the detection tools, while largely effective, produce false positives roughly 2% of the time. For students who are falsely accused, the consequences can be devastating.

On today’s Big Take podcast, host Sarah Holder speaks to Bloomberg’s tech reporter Jackie Davalos about how students and educators are responding to the emergence of generative AI and what happens when efforts to crack down on its use backfire.

Read more: AI Detectors Falsely Accuse Students of Cheating—With Big Consequences

In 1 playlist(s)

Big Take

The Big Take from Bloomberg News brings you inside what’s shaping the world's economies with the sma…Social links

Follow podcast

Recent clips

Special Report: US and Israel Strike Iran

11:55

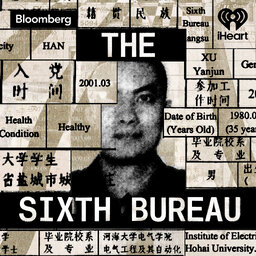

The Sixth Bureau Episode 4: The Duck Analogy

33:40

How the ‘Power Game’ Is Reshaping Venezuela

18:05

Big Take

Big Take