The Dark Side of TikTok’s Algorithm

Bloomberg senior investigative reporter Olivia Carville is back with her latest reporting on TikTok. She explains how the superpopular app’s algorithm can serve up a stream of anxiety and despair to teens, including videos about eating disorders and suicide. And Jennifer Harriger, a professor of psychology at Pepperdine University, joins to talk about the effect these messages can have on teens and young adults.

Read the story: TikTok’s Algorithm Keeps Pushing Suicide to Vulnerable Kids

Listen to The Big Take podcast every weekday and subscribe to our daily newsletter: https://bloom.bg/3F3EJAK

Have questions or comments for Wes and the team? Reach us at bigtake@bloomberg.net.

This podcast is produced by the Big Take Podcast team: Supervising Producer: Vicki Vergolina; Senior Producer: Kathryn Fink; Producers: Mo Barrow, Rebecca Chaisson, Michael Falero and Federica Romaniello; Associate Producers: Sam Gebauer and Zaynab Siddiqui; Sound Design/Engineers: Raphael Amsili and Gilda Garcia.

Big Take

The Big Take from Bloomberg News brings you inside what’s shaping the world’s economies with the sma…

Follow podcast

Recent clips

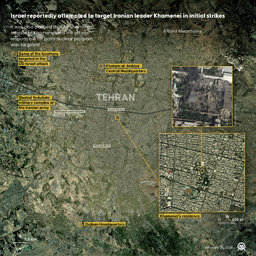

After Months of Succession Planning, Who’s Leading Iran?

14:56

The Escalating Conflict With Iran

15:10

Former Goldman Sachs CEO Lloyd Blankfein Says the Market Is Due For a Reckoning

36:40

Big Take

Big Take